As a Quality Engineering Manager, my mission is to revolutionize testing by fostering continuous feedback and process optimization. In this dynamic landscape, our focus extends beyond testing alone: it is about creating a fast feedback system that instills quality at every level of our organization. Our goals have been:

• Swift Feedback System – Our teams collaborate seamlessly, sharing insights and learning from each iteration. This iterative approach not only accelerates development but also enhances the overall quality of our deliverables. By embracing agile methodologies, we ensure that feedback loops are tight, enabling rapid adjustments and improvements.

• Instilling a Quality Mindset – Quality consciousness isn’t limited to a select few; it’s a collective effort. From developers to testers, everyone plays a crucial role. We promote a culture where quality isn’t an afterthought but is woven into the fabric of our work. Regular training, workshops, and knowledge sharing reinforce this mindset.

• Agile + VSM = Data-Driven Decisions – Value Stream Mapping (VSM) provides us with a holistic view of our processes. By visualizing the end-to-end flow, we identify bottlenecks, waste, and areas for improvement. When combined with agile practices, VSM becomes a powerful tool for data-driven decision-making. We analyze metrics, identify patterns, and make informed choices to optimize quality and efficiency.

We leverage agile principles and VSM to elevate our quality standards and streamline production releases.
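Value stream mapping usually quantifies a flow with a few standard metrics. Lead time is the total elapsed time from start to finish, process time is the time actually spent working, and flow efficiency is process time divided by lead time. The sketch below computes these metrics from per-stage timings and flags the stage with the longest wait as the first bottleneck candidate. It assumes a hypothetical `Stage` structure and made-up hours. It is not Nimble's data model or NimbleWork data.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One step in a value stream (hypothetical structure for illustration)."""
    name: str
    work_hours: float  # time someone actively worked on the item
    wait_hours: float  # time the item sat idle in or before this stage


def flow_metrics(stages: list[Stage]) -> dict:
    """Compute classic VSM metrics for a single work item's journey."""
    process_time = sum(s.work_hours for s in stages)
    lead_time = process_time + sum(s.wait_hours for s in stages)
    # Flow efficiency: share of lead time that was value-adding work.
    efficiency = process_time / lead_time if lead_time else 0.0
    # The stage with the most idle time is the first bottleneck candidate.
    bottleneck = max(stages, key=lambda s: s.wait_hours).name
    return {
        "lead_time": lead_time,
        "process_time": process_time,
        "flow_efficiency": round(efficiency, 2),
        "bottleneck": bottleneck,
    }


if __name__ == "__main__":
    # Hypothetical timings for one card, in hours.
    card = [
        Stage("Story grooming", work_hours=4, wait_hours=8),
        Stage("Development", work_hours=16, wait_hours=4),
        Stage("Code review", work_hours=2, wait_hours=20),
        Stage("Testing", work_hours=6, wait_hours=0),
    ]
    print(flow_metrics(card))
```

In this made-up example, the card has a lead time of 60 hours and a process time of 28 hours, so its flow efficiency is 0.47. It spends more than half its lead time waiting, and most of that wait sits in code review. That is where a team would look first.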

Background

Whether it is project management best practices as prescribed by PMI, the Agile Manifesto, or any other development method from waterfall to hybrid, they all subscribe to a simple recipe. Senior stakeholders want to stay informed about how downstream teams align with a larger objective. Middle management wants a better hold on resources, timelines, costs, and budgets to ensure optimal utilization. In addition, they want to understand bottlenecks and quickly take corrective action by measuring metrics from the ecosystem.

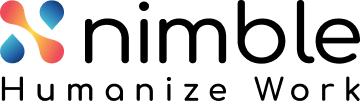

Junior members need focus and independence to work on their respective deliverables. They need feedback and insights into their work while they are still within the context of the card being worked on. Each person in the organizational hierarchy needs the big picture for reference, along with a more localized overview of their own work, to make informed decisions. Needs and perspectives within an organization are diverse. To align these diverse groups, let’s understand the drivers for data-driven decision-making.

Let’s dive in to see how we are practicing data-driven decision-making at NimbleWork with the help of these drivers.

Customer Focus

We follow Behavior Driven Development (BDD), a cohesive approach that prioritizes the discovery aspect of specifications through collaborative discussions between the business and delivery teams. Business alignment and customer focus are in the very nature of BDD.

A depth-first approach during ideation may lead to information overload. It may also fail to consider the broader business impact, undermining the ultimate goal. Delivery bandwidth is both valuable and limited. BDD helps us carve out the right minimum viable product (MVP) by setting up the upstream and downstream value streams.

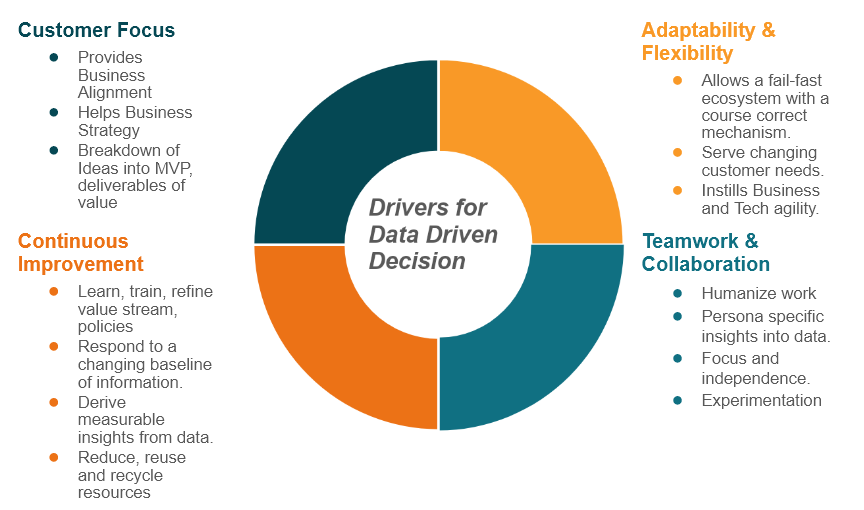

Epic Value Stream

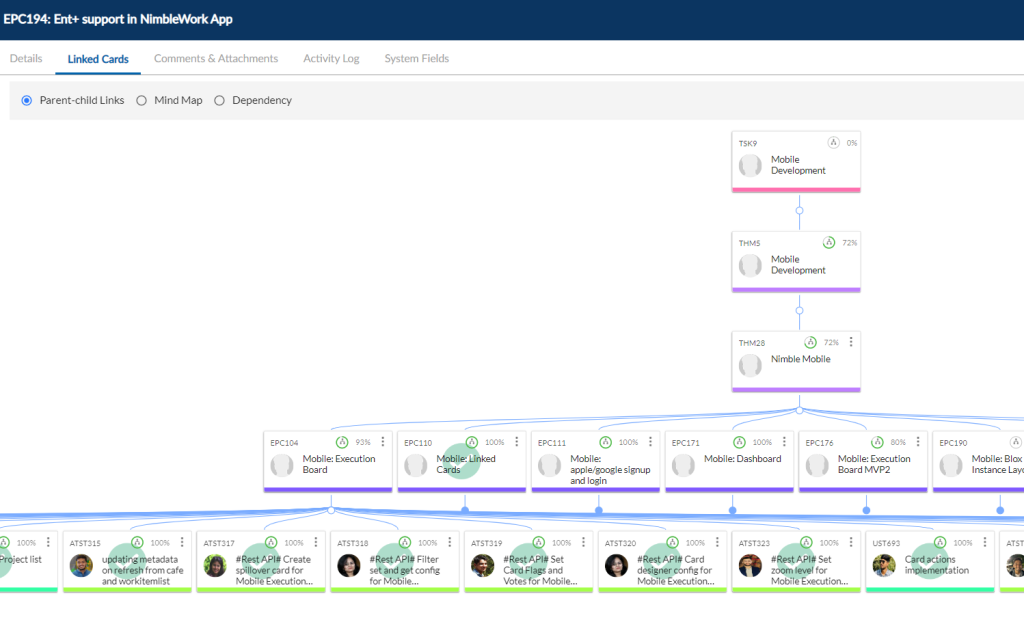

We begin by breaking our organization’s vision and goals down into top-level epics and themes. BDD and story mapping help us translate these ideas into valuable work items called cards. Overall epic completion is tracked automatically through the card hierarchy, with card progress rolling up from the bottom. Eating our own dog food, we use Nimble to easily track these epics in the “Epic Progress” value stream. Senior management stakeholders in our team keep their focus on this value stream.
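The bottom-up roll-up can be sketched as a recursive walk over the card hierarchy. The sketch assumes a simple model in which each leaf card has an estimate and a done flag. A parent's progress is the estimate-weighted share of completed leaf work beneath it. The `Card` class and this weighting rule are hypothetical. Nimble's actual roll-up formula is not described in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    """A work item; leaf cards carry estimates (hypothetical model)."""
    title: str
    estimate: float = 0.0
    done: bool = False
    children: list["Card"] = field(default_factory=list)


def rollup(card: Card) -> tuple[float, float]:
    """Return (completed_estimate, total_estimate) for the card's subtree."""
    if not card.children:
        return (card.estimate if card.done else 0.0, card.estimate)
    completed = total = 0.0
    for child in card.children:
        c, t = rollup(child)  # progress flows from leaves up to the epic
        completed += c
        total += t
    return completed, total


def progress(card: Card) -> float:
    """Percentage of estimated work completed under this card."""
    completed, total = rollup(card)
    return round(100 * completed / total, 1) if total else 0.0


if __name__ == "__main__":
    epic = Card("Mobile interface", children=[
        Card("Login feature", children=[
            Card("Login screen", estimate=3, done=True),
            Card("Password reset", estimate=2),
        ]),
        Card("Dashboard", estimate=5, done=True),
    ])
    print(progress(epic))  # 80.0
```

Weighting by estimate rather than by card count means a large unfinished card holds the epic back more than a small one. This is one reasonable choice among several.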

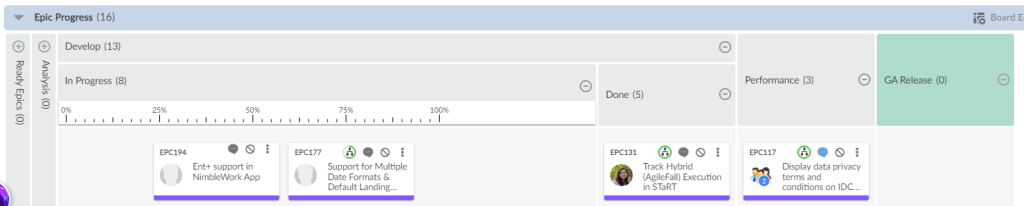

Story Grooming Value Stream

Product owners and technical and test leads follow the “story grooming” value stream to make sure they take a 360-degree review of functional and non-functional requirements before delegating them for downstream implementation. This value stream prevents rework that would otherwise derail downstream work. Thus, upstream quality is effectively maintained.

Adaptability and Flexibility

We rely on four pillars of agility to help teams adapt and improve productivity. BDD and agile methods go hand in hand, while clean engineering practices and DevOps provide the much-needed lubrication that brings flexibility to the ecosystem. Here’s how we implemented it.

Here is the story of one important business idea that we recently delivered to production.

The business strategy team decided to increase product penetration in the SMB market. To achieve this, we chose to develop a mobile interface. After analyzing customer usage patterns, the product team prioritized key epics for downstream development in a user story map. Alongside mobile development, we also focused strategically on integration use cases for a prospective customer who emerged unexpectedly. The product team quickly adapted by descoping wish-list epics and redirecting downstream effort wherever development had not yet begun.

Nimble rolls up real-world metrics data, allowing us to gain deeper insights into the upstream value stream. In another instance, we found that card demos generated a lot of UI/UX feedback, and the team could not find time to incorporate it. The team came up with the idea of holding a design review before card demos and updated the story execution value stream accordingly. Much more can be achieved in story execution when we focus on continuous improvement. Let’s see how.

Continuous Improvement

Engineering discipline and DevOps culture go hand in hand. Together, they provide not only adaptability but also the flexibility to quickly roll back decisions that do not work. They empower the team to experiment and adapt based on what it learns, giving teams a much-needed platform to try things out without worrying about failure. Here are some of the best practices we have adopted.

1. When a new microservice is initiated, we use Maven archetypes to ensure that the Git repository structure, architectural units, and test automation structure are in place. This approach not only gives the team a starting point but also promotes consistency and standardization across microservices. DEV, QA, and UAT environments and pipelines are in place from day one.

2. Each microservice has a single responsibility. As a result, microservices can be tested independently and promote code reuse, which in turn reduces the need for extensive test automation for each microservice.

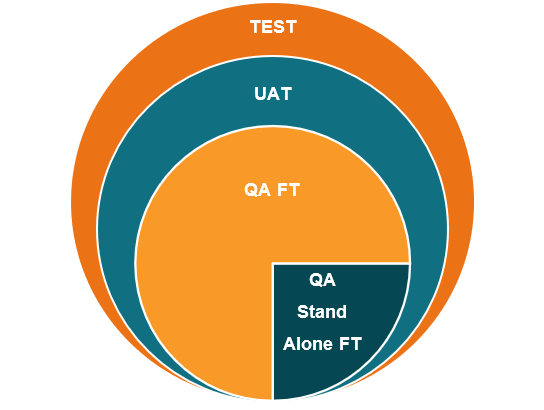

3. We maintain standalone functional test inventories that cover microservice-specific behaviors, and we use service virtualization to mock upstream and downstream dependencies between services. Code is not promoted to UAT unless the unit and standalone functional test beds are clean. The test pyramid is mandatory.

4. API testing helps us ensure that microservices communicate effectively and efficiently, and it reduces the need for extensive end-to-end testing.

5. Implementing CI/CD processes ensures that automated tests are executed quickly and reliably, which reduces the need for manual testing and maintenance efforts. This approach also ensures that any issues are identified and resolved quickly, which reduces the overall time and effort required for maintenance.
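Item 3 sets a promotion rule: nothing reaches UAT unless the unit and standalone functional test beds are clean, and the test pyramid is mandatory. A pipeline stage could express that rule as a small gate. This is a hypothetical sketch, not the team's actual pipeline code. The `LayerResult` structure, the layer names, and the pyramid check are all assumptions for illustration. The check requires each lower layer to have at least as many tests as the layer above it.

```python
from dataclasses import dataclass


@dataclass
class LayerResult:
    """Test outcome for one layer of the pyramid (hypothetical structure)."""
    total: int
    failed: int


# Pyramid layers ordered from base (most tests) to top (fewest).
PYRAMID_ORDER = ["unit", "functional", "api", "e2e"]


def can_promote_to_uat(results: dict[str, LayerResult]) -> tuple[bool, list[str]]:
    """Decide whether a build may be promoted, returning the reasons if not."""
    reasons = []
    # Gate 1: unit and standalone functional test beds must be clean.
    for layer in ("unit", "functional"):
        result = results.get(layer)
        if result is None or result.total == 0:
            reasons.append(f"no {layer} tests reported")
        elif result.failed > 0:
            reasons.append(f"{result.failed} failing {layer} test(s)")
    # Gate 2: test counts should shrink as we move up the pyramid.
    counts = [results[layer].total for layer in PYRAMID_ORDER if layer in results]
    for lower, upper in zip(counts, counts[1:]):
        if upper > lower:
            reasons.append("test pyramid inverted")
            break
    return (not reasons, reasons)


if __name__ == "__main__":
    build = {
        "unit": LayerResult(total=420, failed=0),
        "functional": LayerResult(total=85, failed=2),
        "api": LayerResult(total=40, failed=0),
        "e2e": LayerResult(total=6, failed=0),
    }
    ok, why = can_promote_to_uat(build)
    print(ok, why)  # False ['2 failing functional test(s)']
```

The gate returns its reasons along with the decision, so a CI job could post them to the card instead of simply failing.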

Plowing Back Metrics into VSM

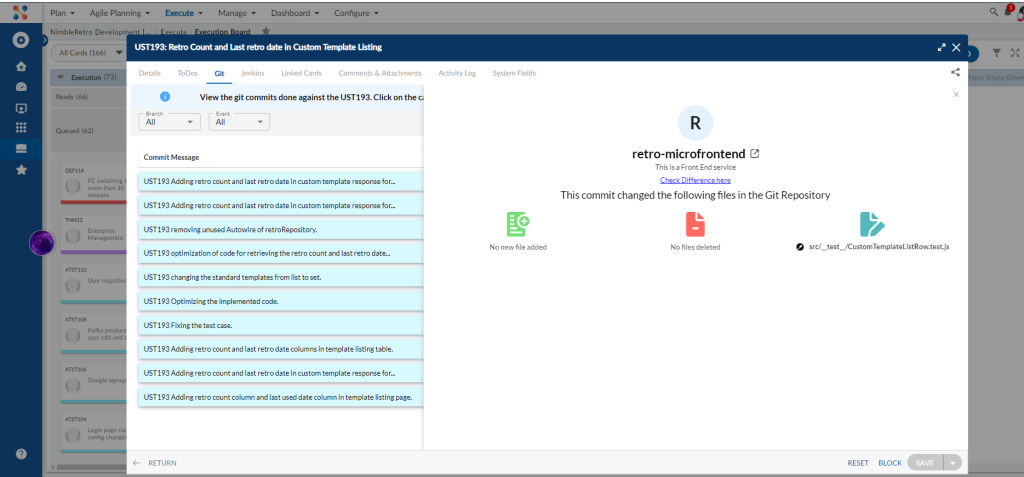

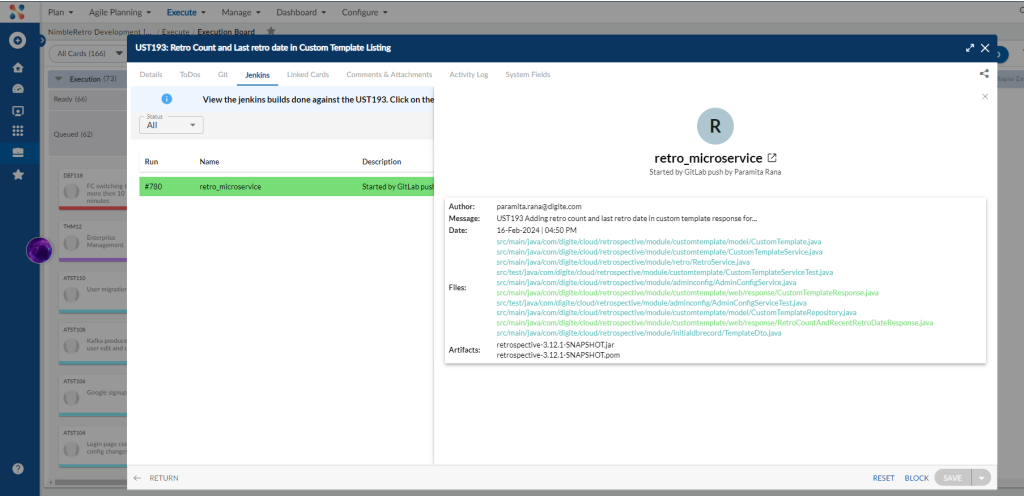

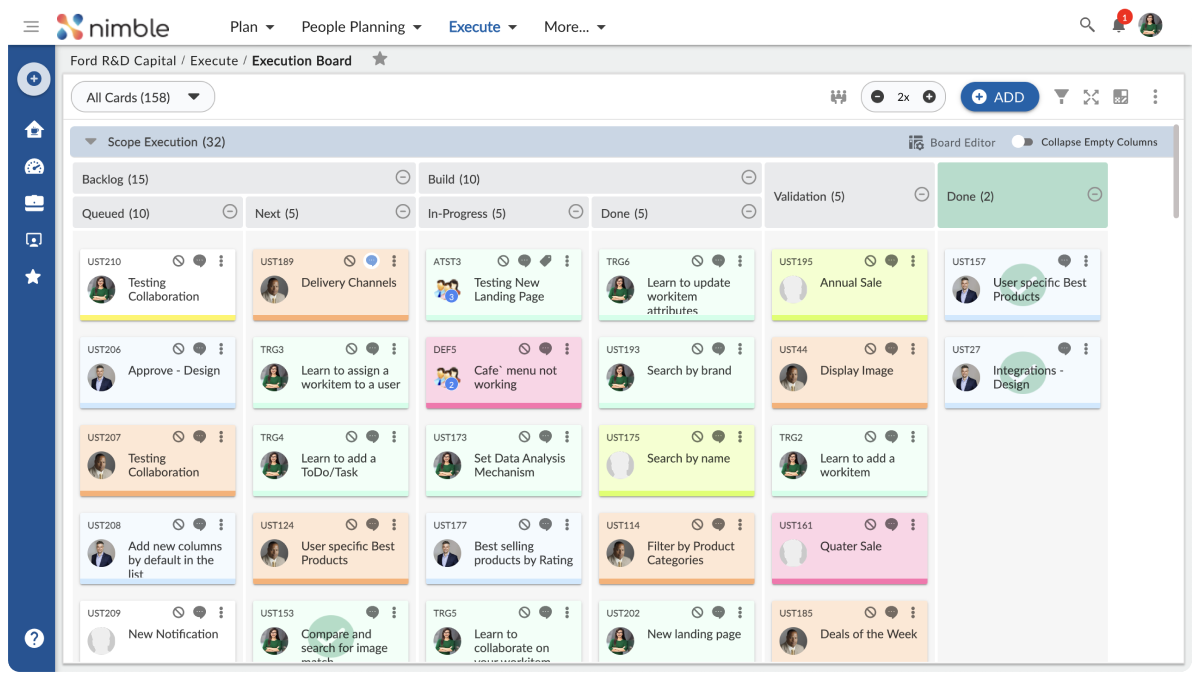

Middle managers need to keep a close eye on the metrics that the tool ecosystem generates across our asset repositories. For example, on each commit, Git and Jenkins pipelines give us insight into code quality through Sonar analysis and JUnit runs. With this information attached, a card on the execution board is not a mere placeholder; it reflects the actual state of the deliverable.
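For a card to reflect real pipeline state, test results have to be reduced to a few numbers that a board can display. The sketch below uses Python's standard `xml.etree.ElementTree` to parse a report in the de facto JUnit XML format, which JUnit runners emit and Jenkins' JUnit plugin consumes. It then produces a pass/fail summary. The `summarize_junit` name and the sample report are hypothetical. How a summary would be attached to a Nimble card depends on Nimble's integration points and is not shown.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict:
    """Count test outcomes in a JUnit-style XML report."""
    root = ET.fromstring(xml_text.strip())
    summary = {"total": 0, "passed": 0, "failed": 0, "errors": 0, "skipped": 0}
    failing = []
    # iter() walks the whole tree, so <testsuites> and bare <testsuite> roots both work.
    for case in root.iter("testcase"):
        summary["total"] += 1
        name = f'{case.get("classname", "")}.{case.get("name", "")}'
        if case.find("failure") is not None:
            summary["failed"] += 1
            failing.append(name)
        elif case.find("error") is not None:
            summary["errors"] += 1
            failing.append(name)
        elif case.find("skipped") is not None:
            summary["skipped"] += 1
        else:
            summary["passed"] += 1
    summary["failing_tests"] = failing
    return summary


SAMPLE_REPORT = """
<testsuite name="OrderServiceTest" tests="3">
  <testcase classname="OrderServiceTest" name="createsOrder"/>
  <testcase classname="OrderServiceTest" name="rejectsEmptyCart">
    <failure message="expected 400 but was 500"/>
  </testcase>
  <testcase classname="OrderServiceTest" name="appliesDiscount">
    <skipped/>
  </testcase>
</testsuite>
"""

if __name__ == "__main__":
    print(summarize_junit(SAMPLE_REPORT))
```

For the sample report, the summary counts 3 tests: 1 passed, 1 failed, and 1 skipped. It lists `OrderServiceTest.rejectsEmptyCart` as failing.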

Teamwork and Collaboration

Our downstream value stream is a great enabler of collaboration amongst the cross-functional teams.

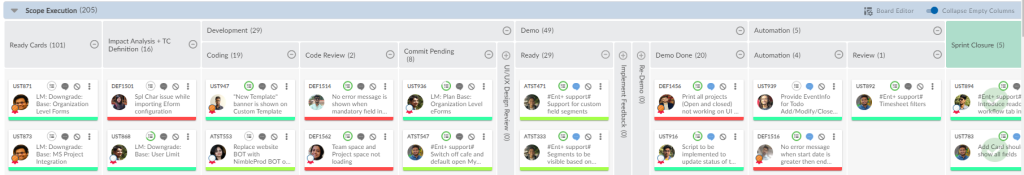

The downstream execution value stream shown above emphasizes a BDD-TDD approach: test cases are designed before coding. This keeps the test pyramid intact and enables fast-running test cycles that stay close to the codebase. When dealing with large and disruptive user stories, testers work with developers to take a step-by-step approach and identify which aspects of the microservices are changing.

During the estimation process, they consider the effort required to port UX/UI refactoring and include it in the overall card estimates. As part of the scope execution value stream, we have a UI/UX/Doc review column after coding, which helps the UI/UX and documentation team engage earlier in the development process. They review whether their part of the work is correctly reflected in the end deliverable before the demo. By the time a card reaches the demo stage, it is holistically complete.
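In a BDD-TDD flow like the one described above, an agreed acceptance scenario becomes a failing test before any production code exists. Developers then write the code that makes it pass. The sketch below shows that order in plain Python `unittest`, with the scenario captured as Given/When/Then comments and the minimal implementation written afterwards. The `apply_discount` function and its rule are invented for illustration. The rule gives 10% off orders of 100 or more. Teams practicing BDD often use a Gherkin runner such as Cucumber or behave instead of plain unit tests.

```python
import unittest


# Step 1 (written first): the acceptance scenario as executable tests.
class DiscountScenarioTest(unittest.TestCase):
    def test_large_order_gets_ten_percent_off(self):
        # Given a cart totaling 200
        total = 200.0
        # When the discount rule is applied
        result = apply_discount(total)
        # Then the customer pays 180
        self.assertAlmostEqual(result, 180.0)

    def test_small_order_pays_full_price(self):
        # Given a cart totaling 50, when the rule is applied,
        # then the price is unchanged
        self.assertAlmostEqual(apply_discount(50.0), 50.0)


# Step 2 (written after the tests fail): the minimal code that satisfies them.
def apply_discount(total: float) -> float:
    """Hypothetical rule: orders of 100 or more get 10% off."""
    return total * 0.9 if total >= 100 else total


if __name__ == "__main__":
    # argv and exit=False keep unittest from parsing real CLI args or exiting.
    unittest.main(argv=["discount-tests"], exit=False)
```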

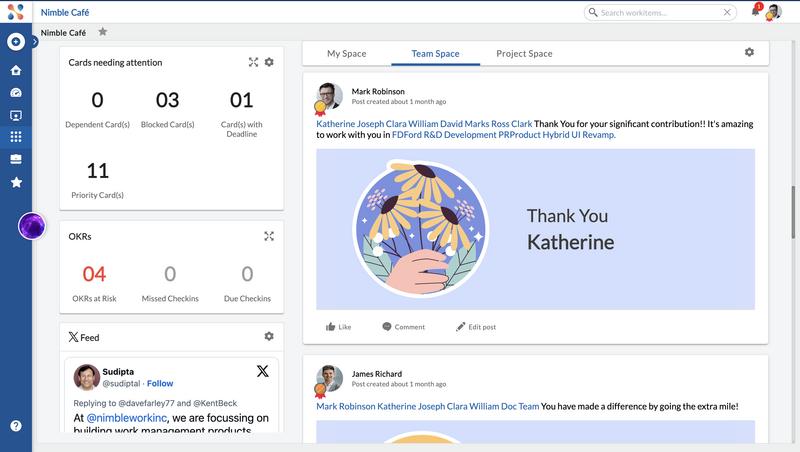

Our teams collaborate, appreciate each other, give kudos, and manage their work items using our very own Café. It also helps cross-functional teams stay informed about deadlines and any expedited work items.

The Advent of AI/ML

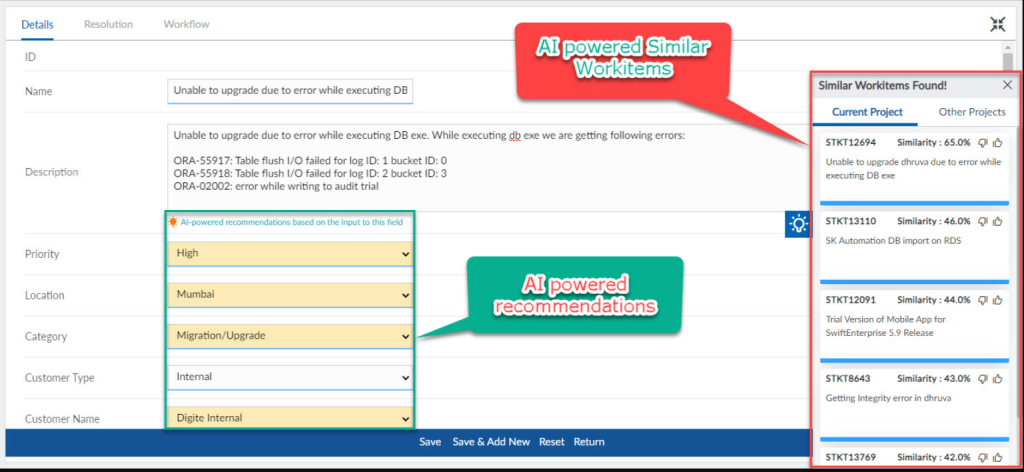

We capture large volumes of real-time data, including project-specific details, user stories with acceptance scenarios, defect cards with root cause and impact analysis, and resources with their skill sets, availability, and working patterns. We rely on Nimble to derive the right perspective and intelligent insights from this data.

These productivity boosters help us quickly gain insights into similar items from the past, along with recommendations and guidelines. The more data we have, the better the outcome: with large volumes of historical data, intelligent predictions can be suggested in real time. LLM support in the Nimble chatbot adds another dimension, making it easier than ever to find required resources.

Test automation tools enabled with intelligent solutions play an important role in reducing maintenance and improving automation development productivity. We have explored multiple tools on the market, and we use some of them in our mainstream work, such as Selenium coupled with Healenium, and Sahi Pro.

Conclusion

A data-driven approach gives us valuable insights to improve software quality throughout the entire development lifecycle. AI-powered recommendations help us identify areas for improvement and prioritize actions easily. Value streams are integrated with a variety of tools in the development ecosystem, giving us a holistic view of quality data. This encourages a culture of data-driven decision-making within our team, so that insights are used effectively. By combining these strategies, we transform quality engineering from reactive to proactive, ensuring exceptional quality with every release.