Data science is not just about solving business problems mathematically; it is also about telling a story to stakeholders. It is a joy to draw out the “OOHs” and “AAHs” as mental bulbs warmly glow into existence when the results of an analysis are understood. More often than not, however, such storytelling is not possible when one bakes algorithmic outputs into products.

Having worked on “data-science products” throughout my career, I have often found that the sale of such a product goes through when the product can guide the user through the story of the analyses it presents. But how does one get the personified product to explain the results of a multidimensional model with a multimodal decision boundary to a business user? Enter XAI (eXplainable Artificial Intelligence).

Touted as the third wave of AI, it was ‘jargoned’ into existence to reduce the possibility of justifying consequential decisions with “the algorithm made me do it”. The gravity of the problem comes to the fore in cases such as Microsoft’s Twitter bot Tay, which learned to spout racist tweets.

(Why the third wave of AI? Refer to this interesting video by John Launchbury, made under the aegis of DARPA.)

Algorithms are becoming ubiquitous, and their outputs are being used by society at large without an understanding of their inner workings. How does one prevent bias from creeping into algorithms? If it does creep in, how does one find out and nip the problem in the bud without waiting for the algorithm to start actively discriminating or giving unintended results? The folks over at FAT-ML (Fairness, Accountability, and Transparency in Machine Learning) have taken up this noble cause.

While my motive of “increasing data-science product sales” may not be as noble, the use of XAI to explain algorithmic results to the ‘humans in the loop’ is completely in alignment with the aims of the aforementioned group.

Where does the eXplainability come from?

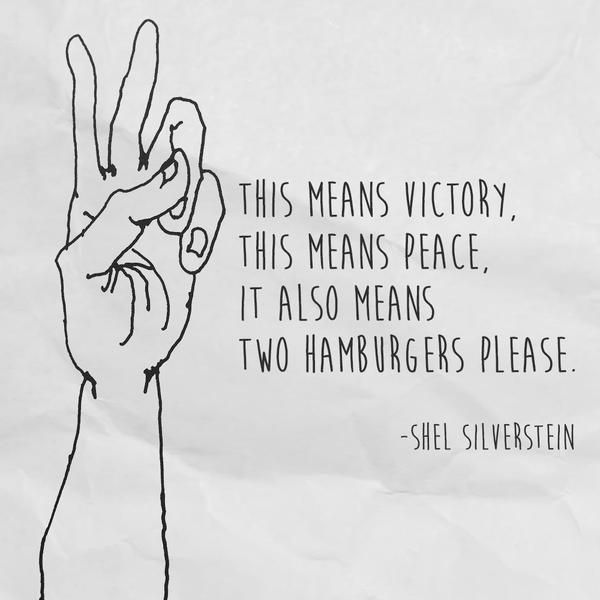

Image courtesy: Shel Silverstein

The explainability in XAI comes from a set of techniques that add contextual adaptability and interpretability to machine learning models. I would combine the two terms and call the result “contextual reasoning”.

There is no formal mathematical definition for this term, but one may say that it is the ability to come to a conclusion from concepts (like a human) rather than by just using probability.

For example, if one were asked to assign a reason why two fingers (as in the picture) have been raised, a human might assign a reason by looking at the burger joint in the background or at the black-and-white photos of soldiers grinning on the battlefield.

An algorithm, on the other hand, would estimate the probability distribution of what the two raised fingers mean from a large collection of training photographs, gathered from the internet, of people holding up the “V” sign, and then assign a reason based on this distribution.

So over a large number of cases the algorithm may prove correct, but sooner or later there would come a case, such as a soldier holding up the same sign at a burger joint, that causes problems: the algorithm gets it wrong because it associates soldiers holding up the sign with victory.
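The failure described above can be sketched with a toy frequency-based classifier. This is only an illustration under stated assumptions: the photo counts, the labels, and the function names `meaning_distribution` and `predict` are all made up for this example and do not come from any real dataset or library.

```python
from collections import Counter

# Hypothetical, hand-made training set: (who is in the photo, what the sign meant).
# The counts are invented to mimic a collection dominated by wartime photos.
training_photos = (
    [("soldier", "victory")] * 90
    + [("soldier", "two burgers")] * 2
    + [("civilian", "victory")] * 10
    + [("civilian", "two burgers")] * 30
)

def meaning_distribution(photos, subject):
    """Estimate P(meaning | subject) from raw counts in the training photos."""
    counts = Counter(meaning for who, meaning in photos if who == subject)
    total = sum(counts.values())
    return {meaning: n / total for meaning, n in counts.items()}

def predict(photos, subject):
    """Pick the most probable meaning; the background never enters the decision."""
    dist = meaning_distribution(photos, subject)
    return max(dist, key=dist.get)

# A soldier at a burger joint is still labelled "victory", because the
# model only knows how often soldiers meant "victory" in its training data.
soldier_dist = meaning_distribution(training_photos, "soldier")
soldier_prediction = predict(training_photos, "soldier")
civilian_prediction = predict(training_photos, "civilian")
print(soldier_dist)
print(soldier_prediction, "|", civilian_prediction)
```

Printing the distribution alongside the prediction is the simplest form of explanation here: it shows that the answer for soldiers rests almost entirely on the skewed counts rather than on anything in the scene itself.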

If a human were to understand that the algorithm was biased by the large number of World War II photographs on which the model was trained, then they would make an effort to circumvent such conclusions.

In fact, this would also help the data scientist who trained the model, pointing them towards the need to de-bias the training photographs. The story does not end here, however. Hoping that my readers have understood contextual reasoning, I bifurcate context into two subtypes: local context and global context.

Here is another example that illustrates the difference between the two. The highest temperatures ever recorded may not be worrisome in the middle of the Sahara desert (local context), but they are definitely concerning if they occur across the globe (global context ... yes, I did that).

The set of techniques under XAI can be grouped by the kind of contextual information they provide. By no means are these techniques new. Some of them, like “global feature importance”, have been around for quite a while, but what is certainly new is that data scientists can combine these techniques to enable an algorithm to explain itself in their absence.
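To make the local/global split concrete, here is a minimal sketch using a hypothetical linear model. The weights, the feature names, and the three data rows are invented for illustration, and the attribution used (weight times feature value) is just one simple choice that works for linear models; it is not the method of any particular XAI library.

```python
# Hypothetical linear model: score = sum(weight * feature value).
weights = {"temperature": 0.8, "humidity": -0.3, "wind": 0.1}

# Hypothetical observations (already scaled), one dict per row.
rows = [
    {"temperature": 2.0, "humidity": 1.0, "wind": 0.5},
    {"temperature": -1.0, "humidity": 3.0, "wind": 0.0},
    {"temperature": 0.5, "humidity": 0.0, "wind": 4.0},
]

def local_contributions(row):
    """Local context: how much each feature pushed the score for ONE row."""
    return {name: weights[name] * row[name] for name in weights}

def global_importance(data):
    """Global context: mean absolute contribution of each feature over ALL rows."""
    n = len(data)
    return {
        name: sum(abs(weights[name] * row[name]) for row in data) / n
        for name in weights
    }

local = local_contributions(rows[1])
overall = global_importance(rows)

# The feature that matters most for one prediction need not be
# the one that matters most across the whole dataset.
top_local = max(local, key=lambda name: abs(local[name]))
top_global = max(overall, key=overall.get)
print("row 2 is driven by:", top_local)
print("dataset is driven by:", top_global)
```

With these made-up numbers, humidity dominates the second row while temperature dominates overall, which is the same distinction as the Sahara versus the globe.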

In the next part of this blog series, I will delve into the kinds of techniques covered under XAI. Meanwhile, check out RISHI-XAI – The World’s First XAI (eXplainable AI) Product for Enterprise Project Intelligence from Digité.