Over the last few years of steady-state use of Kanban in our product management and engineering teams, we have achieved a number of dramatic improvements. Even allowing for the fact that, for us, this was (and is) a case of eating our own dog food, these improvements are nothing short of incredible for a small product company where every minute, every dollar, and every feature of value to a customer counts! So we thought we should share our experience and its benefits with the software and IT community, in the hope that it helps you gain as much as we have, or more. This post is the first in a series.

Time to Market – A Critical Success Factor!

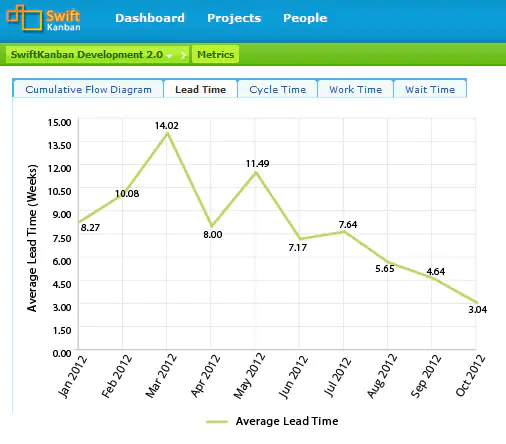

Within the SwiftKanban development team, we achieved a dramatic reduction in Cycle Time last year. This post explores the obvious and the not-so-obvious factors that helped us accomplish this. The chart below shows our average cycle time over the last 12 months, during which it fell from about 12 weeks to about 3 weeks.

Some Background First!

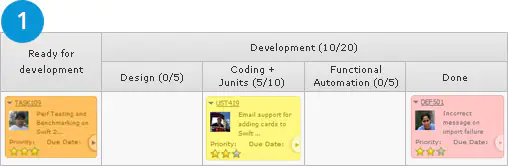

As I mentioned earlier, we started the “Kanbanization” of our Dev team in July 2011. The first 6 months were spent training the team and giving them a basic understanding of Kanban concepts, the Standup Call process, collaborating on the board, and so on. We went through several iterations of the value stream design, trying to reduce WIP and to figure out where our buffer lanes should be to enable “pull”. It wasn’t obvious to us then that buffer lanes belong wherever work is handed off from one person to another! In another iteration, we split the requirement definition process into a separate lane. Since then, our Value Stream has stabilized; for the last 6+ months, it has looked like this:

The results, and the benefits, have been dramatic. For one, over the last 10-12 months our average Cycle Time has fallen from about 12 weeks to about 3 weeks: a reduction of roughly 75%, or a fourfold improvement! So we decided to dig deeper to find out why.
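Using the approximate figures from the chart (about 12 weeks down to about 3 weeks), the change in average cycle time works out as:

$$
\text{reduction} = \frac{12 - 3}{12} = 75\%, \qquad \text{speed-up} = \frac{12}{3} = 4\times, \qquad \frac{12 - 3}{3} = 300\%
$$

Put the other way round, the old average was 300% longer than the new one; a reduction itself can never exceed 100%.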

Some Obvious Reasons

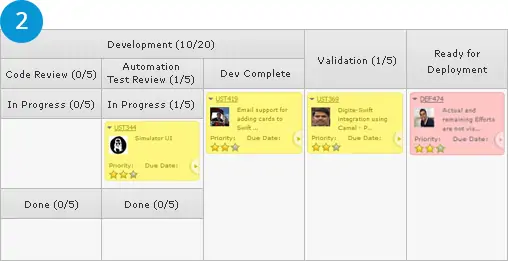

#1: Bottleneck lane – As the value stream and flow stabilized, we realized that all our user stories were piling up at the “Test Automation Review” lane. Test Automation was new to this Dev team, and we were using a new tool (Sahi), so we initially felt that all new automation code needed to be reviewed by our expert (and we had only one). We recognized the bottleneck but let it continue for a quarter, until we were comfortable that the team had reached an acceptable level of competence. Once that happened, we moved from:

reviewing all automation scripts → reviewing a sample of scripts → reviewing automation only for complex user stories.

Next, to ease the bottleneck further, we assigned a second reviewer once we were confident he would be effective in the role (reviewing is uncomfortable for many people; having to find issues in your peers’ work is not a welcome thought!).

#2: Multitasking – One team used to take on too many cards because they were simply too excited to start on all the new ideas that kept coming up. This is a personality trait, and it takes time to correct the natural instinct to jump to the next exciting idea before completing the current one. The result was high WIP and WIP limit violations. Over time, we learned to spot this behavior and bring it under control.
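One general way to see why high WIP lengthens cycle time is Little's Law, a standard queueing result (not something we measured) that holds for long-run averages in a stable system: average cycle time equals average WIP divided by average throughput. With a purely hypothetical throughput of 2 cards per week:

$$
\text{Cycle Time} = \frac{\text{WIP}}{\text{Throughput}}, \qquad \frac{6\ \text{cards}}{2\ \text{cards/week}} = 3\ \text{weeks}, \qquad \frac{12\ \text{cards}}{2\ \text{cards/week}} = 6\ \text{weeks}
$$

Taking on more cards without finishing them any faster therefore raises the average cycle time.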

#3: Large Cards – Some cards were simply too large! Early last year, our card hierarchy and Enterprise Board features were not yet built. Without card hierarchy in the product, we thought that simply marking a card as size XL was adequate. Large cards created another problem: because all coding was done on a single development branch, we could not release many smaller user stories that were ready to go. This led us to evolve our engineering process so that large cards get their own branches in SVN, which are merged into the main development branch when done.

We also started emphasizing, again and again, the need to break large cards into smaller user stories that could be released for functional review. This does not come naturally: a developer’s instinct is to release something only when it feels functionally complete. In addition, people coming from the world of Gantt Chart tracking are tuned to breaking work into tasks rather than decomposing scope. The “pending feature” always seems like just a little more effort. So someone has to keep cajoling the team to let go and release, as long as what is being released can be demonstrated to an end user.

Some Not So Obvious Reasons

#4: Scope creep – It took us some time to realize that this was happening with many cards. Since we had moved away from our earlier process, in which product management wrote a use case document and handed it over to the Dev team, requirements would keep “evolving” and the UI would keep changing. Interestingly, the problem was worse in the Dev team collocated with the Product Manager (in Bangalore). It was less of an issue for user stories worked on by the remote (Mumbai) team, because that team needed more clarity before it could start on a feature. Collocation was counter-productive! So we had to consciously limit the “current scope” of each user story and move follow-up work into a follow-up user story.

#5: R&D Cards – Many user stories need a lot of technology learning! This was the case with many of our Large Cards, and it continues to be a challenge for us even today. We start work thinking a user story is simple enough to implement, even though we know the technology is new to us. Then, suddenly, we realize we have spent over a month figuring out how to work with it. Where we know up front that it will take time, our policy now is to do the R&D under a separate “Engineering Task” card (a different card type), and to begin work on the main user story only once we have figured out the technology. Overall, this has given us better work organization and expectation management, better consolidation of R&D work that affects multiple cards, and better cycle time reporting.
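To illustrate the reporting point, here is a minimal sketch of computing average cycle time per card type. The `Card` record, its field names, and the dates are all hypothetical (this is not SwiftKanban's data model or our real data); it simply compares one user story that absorbs a month of R&D with the same work split into an "Engineering Task" card and a user story.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable


@dataclass
class Card:
    # Hypothetical card record; field names are illustrative only.
    card_id: str
    card_type: str  # e.g. "User Story" or "Engineering Task"
    started: date
    done: date

    @property
    def cycle_time_days(self) -> int:
        return (self.done - self.started).days


def average_cycle_time_by_type(cards: Iterable[Card]) -> Dict[str, float]:
    """Average cycle time in days, grouped by card type."""
    totals = defaultdict(lambda: [0, 0])  # card_type -> [sum of days, card count]
    for card in cards:
        totals[card.card_type][0] += card.cycle_time_days
        totals[card.card_type][1] += 1
    return {card_type: total / count for card_type, (total, count) in totals.items()}


# Hypothetical dates: R&D folded into the user story itself.
combined = [Card("US-1", "User Story", date(2012, 1, 2), date(2012, 2, 20))]
# Same work with the R&D split out into its own Engineering Task card.
split = [
    Card("ET-1", "Engineering Task", date(2012, 1, 2), date(2012, 2, 2)),
    Card("US-1", "User Story", date(2012, 2, 2), date(2012, 2, 20)),
]
print(average_cycle_time_by_type(combined))  # {'User Story': 49.0}
print(average_cycle_time_by_type(split))     # {'Engineering Task': 31.0, 'User Story': 18.0}
```

With the split, the user story's cycle time reflects the implementation work alone, while the R&D effort remains visible under its own card type instead of disappearing into one long story.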

#6: Repetitive tasks across user stories – We learnt this the hard way! Let me explain with a real example. We needed 30+ webservices so that we could integrate SwiftKanban with a broad suite of tools. Because of our team members’ skill sets, we asked one person to develop all the webservices, another to do all the unit test automation for them, and a third to do all the functional automation. As a result, this became one huge card of 30+ webservices that moved together from one stage to the next. Ideally, each webservice could have been rolled out to production incrementally. A better approach would have been to break the inventory of 30+ webservices into 5-6 batches of 5-6 webservices each, so that the average cycle time for each batch would have been around 2-3 weeks. More importantly, we would have achieved a higher throughput! We have become better at batching since then!
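A toy simulation can show why batching helps here. This is a minimal sketch, not our actual data: it assumes exactly 30 webservices, three single-person stages matching the roles described above, a hypothetical 1 day of work per webservice in each stage, and batches processed first-in, first-out. The function name `simulate` and its parameters are illustrative.

```python
# Hypothetical model of the webservices example: three stages, one person each.
STAGES = ("development", "unit test automation", "functional automation")


def simulate(num_items, batch_size, days_per_item):
    """Push batches through the stages in FIFO order.

    Returns (cycle time of each batch card, day the last batch finishes,
    mean day on which an individual webservice becomes releasable).
    """
    sizes = [min(batch_size, num_items - i) for i in range(0, num_items, batch_size)]
    stage_free_at = {stage: 0.0 for stage in STAGES}  # when each person is next free
    cycle_times, makespan, delivery_days = [], 0.0, 0.0
    for size in sizes:
        ready_at = 0.0  # when this batch may enter the next stage
        started_at = None
        for stage in STAGES:
            begin = max(ready_at, stage_free_at[stage])
            if started_at is None:
                started_at = begin  # work on this batch card starts here
            ready_at = begin + size * days_per_item[stage]
            stage_free_at[stage] = ready_at
        cycle_times.append(ready_at - started_at)
        makespan = max(makespan, ready_at)
        delivery_days += size * ready_at  # every item in a batch ships together
    return cycle_times, makespan, delivery_days / num_items


effort = {stage: 1.0 for stage in STAGES}  # hypothetical: 1 day per webservice per stage
for batch_size in (30, 6):
    cycle_times, makespan, mean_delivery = simulate(30, batch_size, effort)
    avg_cycle = sum(cycle_times) / len(cycle_times)
    print(f"batch of {batch_size}: cycle time per card {avg_cycle:.0f} days, "
          f"all done by day {makespan:.0f}, average webservice released on day {mean_delivery:.0f}")
```

In this toy model, the single large card has a 90-day cycle time and nothing is releasable until day 90. With batches of 6, each batch card takes 18 days, the last one finishes on day 42, and the average webservice is releasable on day 30. The gain comes from letting the three people work in parallel on different batches instead of each waiting for the whole inventory to clear the previous stage; real stage times vary, so the numbers are illustrative only.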

These six factors have directly affected our average Cycle Time. In addition, over the last 18 months we made many (policy) decisions, some documented and some not, which have also helped.

It would be great if you could share your experience and suggestions on changes you have made to your Kanban systems to improve lead/cycle time performance!

Happy “Kanbanization”!